Luca Zhou

About Me

I am a first-year PhD student in Computer Science at GLADIA, Sapienza University of Rome. Previously, I was an exchange student at the Chinese University of Hong Kong (Shenzhen) and a visiting graduate researcher at UC San Diego.

My research primarily focuses on post-training methods and modular deep learning, aiming to make large models more adaptable and scalable for tasks such as multitask learning, zero-shot generalization, and efficient model construction. In parallel, I am also passionate about multimodal language models, time series analysis, and especially their intersection.

Outside the lab, I stay active through sports and explore my creativity through photography and music. I’m always happy to collaborate, exchange ideas, or just have a chat. Feel free to reach out!

Research Interests

- Modular Deep Learning

- Model Merging

- Multitask Learning

- Multimodal Language Models

- Time Series Analysis

Publications

Breaking the Monolith Workshop @ICIAP 2025

Selected Projects

News

-

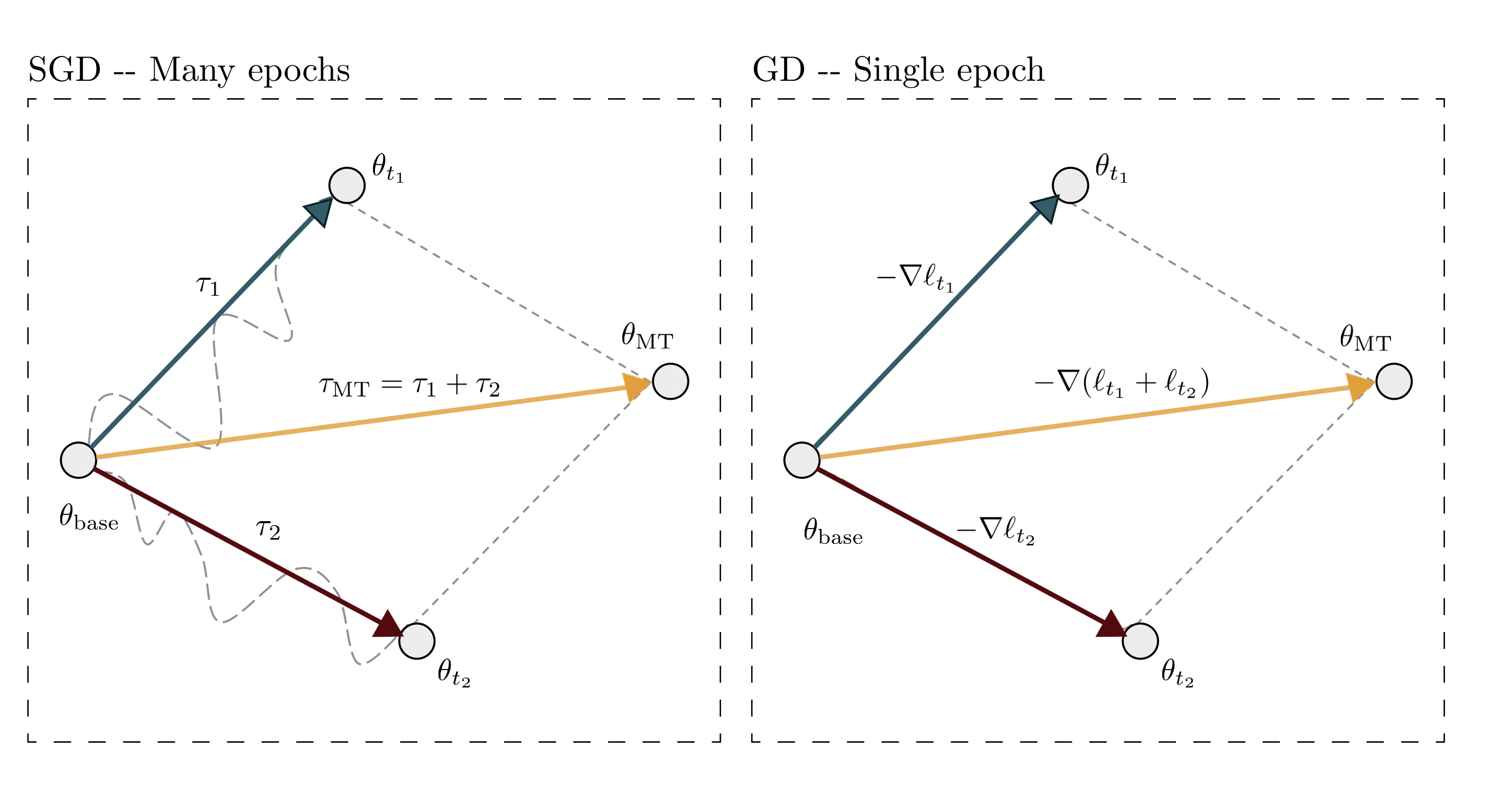

[12-2025] 👨🏻🏫 I’ll be presenting “On Task Vectors and Gradients” at UniReps @NeurIPS 2025 on December 6 in San Diego, feel free to connect if you attend!

-

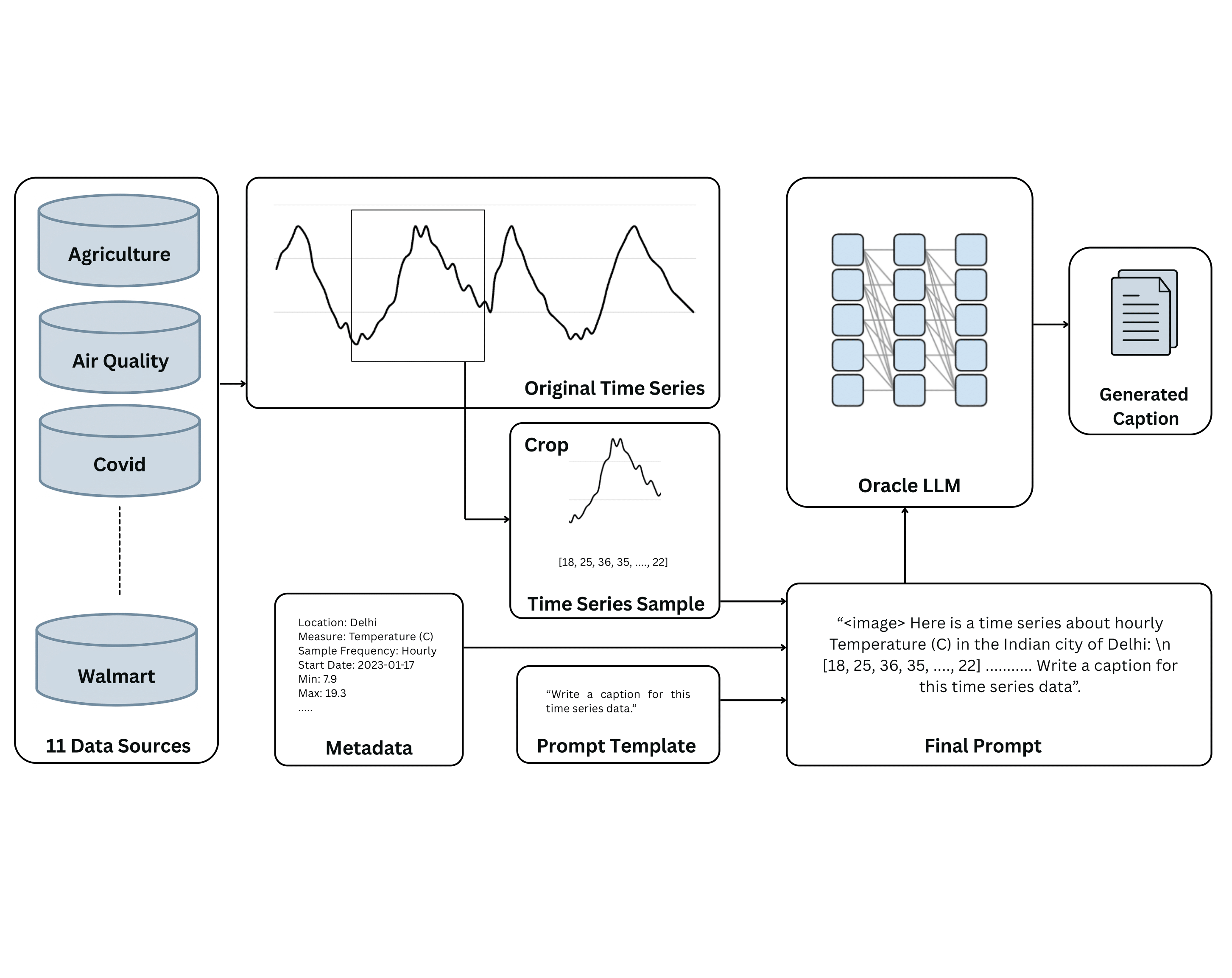

[09-2025] 📜 “CaTS-Bench: Can Language Models Describe Numeric Time Series?”, our multimodal time series captioning benchmark paper is out as a preprint here!

- [09-2025] 🎉 Excited to share that our paper “On Task Vectors and Gradients” will be presented at UniReps @NeurIPS 2025 in San Diego!

- [08-2025] 📜 Our new paper “On Task Vectors and Gradients” explores the link between task vectors and gradients, and is available as a preprint here!

- [07-2025] 🎉 I graduated with honors from Sapienza University of Rome with an MSc! Check out my thesis here.

-

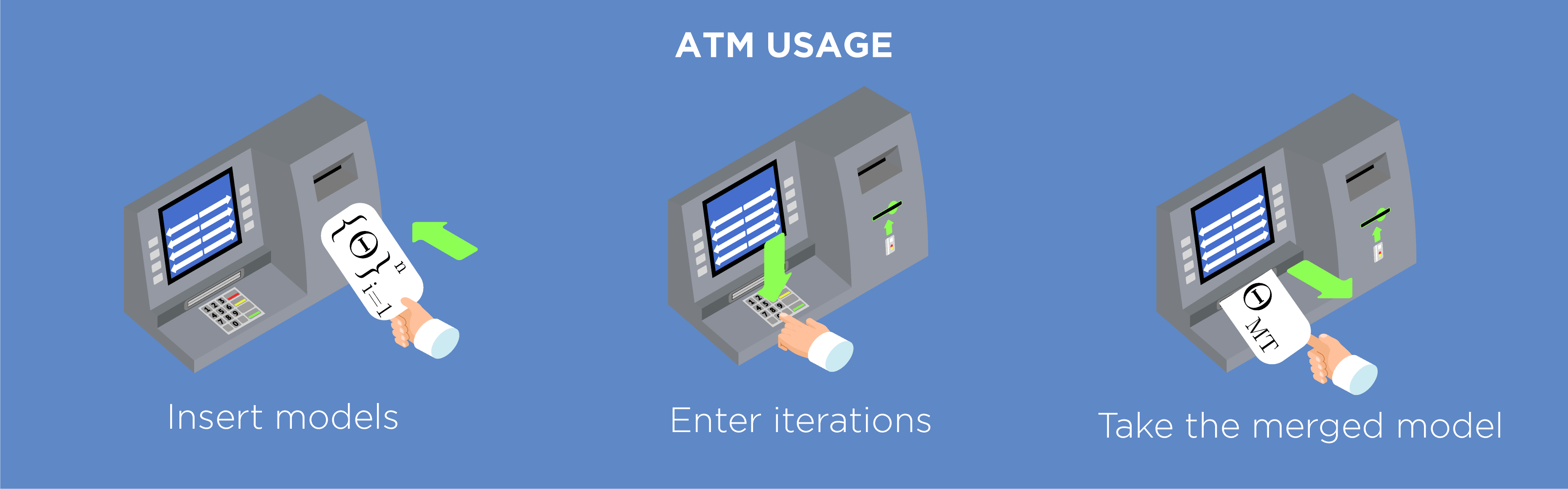

[07-2025] 🎉 Our paper “ATM: Improving Model Merging by Alternating Tuning and Merging” has been accepted for presentation at the Breaking the Monolith: 1st ICIAP Workshop on Advances in Modular Deep Learning!

- [06-2025] 🚀 I’ve started a research internship at Panasonic North America on LLM code generation, collaborating with Stanford University and ItalAI.

- [03-2025] 🚀 I’m at the University of California San Diego as a visiting student researcher, working on a multimodal benchmark for time series captioning and understanding under the guidance of Prof. Rose Yu.

-

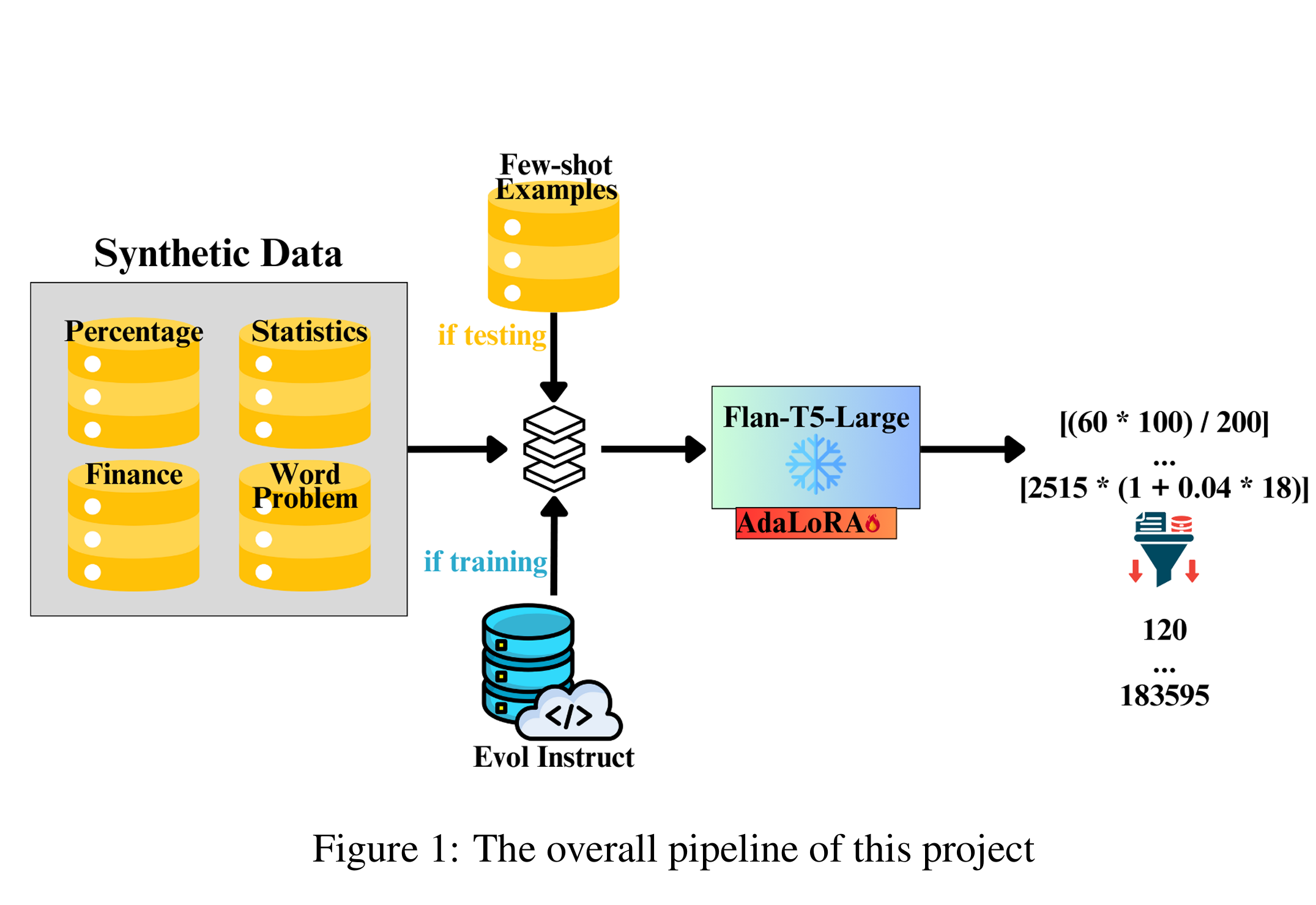

[11-2024] 📜 Our paper on multitask learning is available as a preprint here!